Spot

Founded Year

2018

Stage

Series B | Alive

Total Raised

$62M

Last Raised

$40M | 6 mos ago

About Spot

Spot provides a security camera system that combines video management with AI on a single dashboard. Users can access their video footage anytime, anywhere. Its video intelligence uses AI to find exactly what the user is looking for. The company was founded in 2018 and is based in Los Altos, California.

Spot's Products & Differentiators

AI Camera System

Spot AI's Camera System provides users with an easy-to-use dashboard, a powerful NVR (network video recorder), and the option to get cameras at no cost or keep their own. The dashboard allows customers to see all of their locations from one place and use AI features to search footage and improve operations. The NVR uses on-edge AI processing so that customers can instantly access video and have the flexibility to plug into any network configuration in minutes.

Research containing Spot

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Spot in 1 CB Insights research brief, most recently on Mar 14, 2023.

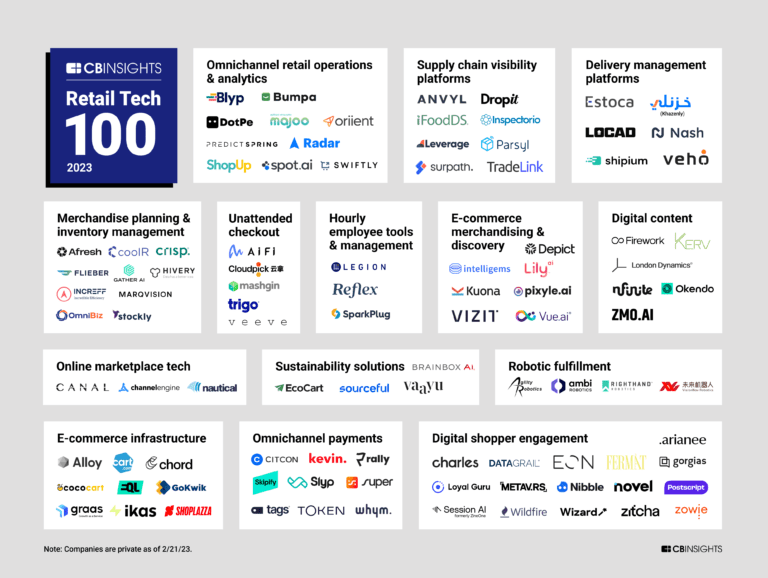

Mar 14, 2023 report

Retail Tech 100: The most promising retail tech startups of 2023

Expert Collections containing Spot

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Spot is included in 3 Expert Collections, including Artificial Intelligence.

Artificial Intelligence

10,624 items

This collection includes startups selling AI SaaS, using AI algorithms to develop their core products, and those developing hardware to support AI workloads.

Store tech (In-store retail tech)

1,543 items

Startups aiming to work with retailers to improve brick-and-mortar retail store operations.

Retail Tech 100

100 items

The winners of the 2023 CB Insights Retail Tech 100, published March 2023.

Latest Spot News

Dec 12, 2022

A talented scribe with stunning creative abilities is having a sensational debut. ChatGPT, a text-generation system from San Francisco-based OpenAI, has been writing essays, screenplays and limericks after its recent release to the public, usually in seconds and often to a high standard. Even its jokes can be funny. Many scientists in the field of artificial intelligence have marveled at how humanlike it sounds. And remarkably, it will soon get better. OpenAI is widely expected to release its next iteration known as GPT-4 in the coming months, and early testers say it is better than anything that came before. (1)

But all these improvements come with a price. The better the AI gets, the harder it will be to distinguish between human and machine-made text. OpenAI needs to prioritize its efforts to label the work of machines or we could soon be overwhelmed with a confusing mishmash of real and fake information online.

For now, it’s putting the onus on people to be honest. OpenAI’s policy for ChatGPT states that when sharing content from its system, users should clearly indicate that it is generated by AI “in a way that no reader could possibly miss” or misunderstand. To that I say, good luck.

AI will almost certainly help kill the college essay. (A student in New Zealand has already admitted that they used it to help boost their grades.) Governments will use it to flood social networks with propaganda, spammers to write fake Amazon reviews and ransomware gangs to write more convincing phishing emails. None will point to the machine behind the curtain. And you will just have to take my word for it that this column was fully drafted by a human, too.

AI-generated text desperately needs some kind of watermark, similar to how stock photo companies protect their images and movie studios deter piracy. OpenAI already has a method for flagging another content-generating tool called DALL-E with an embedded signature in each image it generates. But it is much harder to track the provenance of text. How do you put a secret, hard-to-remove label on words?

The most promising approach is cryptography. In a guest lecture last month at the University of Texas at Austin, OpenAI research scientist Scott Aaronson gave a rare glimpse into how the company might distinguish text generated by the even more humanlike GPT-4 tool. Aaronson, who was hired by OpenAI this year to tackle the provenance challenge, explained that words could be converted into a string of tokens, representing punctuation marks, letters or parts of words, making up about 100,000 tokens in total. The GPT system would then decide the arrangement of those tokens (reflecting the text itself) in such a way that they could be detected using a cryptographic key known only to OpenAI.

“This won’t make any detectable difference to the end user,” Aaronson said. In fact, anyone who uses a GPT tool would find it hard to scrub off the watermarking signal, even by rearranging the words or taking out punctuation marks, he said. The best way to defeat it would be to use another AI system to paraphrase the GPT tool’s output. But that takes effort, and not everyone would do that. In his lecture, Aaronson said he had a working prototype. (2)

But even assuming his method works outside of a lab setting, OpenAI still has a quandary. Does it release the watermark keys to the public, or hold them privately? If the keys are made public, professors everywhere could run their students’ essays through special software to make sure they aren’t machine-generated, in the same way that many do now to check for plagiarism. But that would also make it possible for bad actors to detect the watermark and remove it. Keeping the keys private, meanwhile, creates a potentially powerful business model for OpenAI: charging people for access. IT administrators could pay a subscription to scan incoming email for phishing attacks, while colleges could pay a group fee for their professors, and the price to use the tool would have to be high enough to put off ransomware gangs and propaganda writers. OpenAI would essentially make money from halting the misuse of its own creation.

We also should bear in mind that technology companies don’t have the best track record for preventing their systems from being misused, especially when they are unregulated and profit-driven. (OpenAI says it’s a hybrid profit and nonprofit company that will cap its future income.) But the strict filters that OpenAI has already put in place to stop its text and image tools from generating offensive content are a good start. Now OpenAI needs to prioritize a watermarking system for its text.

Our future looks set to become awash with machine-generated information, not just from OpenAI’s increasingly popular tools, but from a broader rise in fake, “synthetic” data used to train AI models and replace human-made data. Images, videos, music and more will increasingly be artificially generated to suit our hyper-personalized tastes. (3)

It’s possible of course that our future selves won’t care if a catchy song or cartoon originated from AI. Human values change over time; we care much less now about memorizing facts and driving directions than we did 20 years ago, for instance. So at some point, watermarks might not seem so necessary.

But for now, with tangible value placed on human ingenuity that others pay for, or grade, and with the near certainty that OpenAI’s tool will be misused, we need to know where the human brain stops and machines begin. A watermark would be a good start.

More From Bloomberg Opinion:

• ChatGPT Could Make Democracy Even More Messy: Tyler Cowen

• Musk Must Preserve Twitter’s Most Vital Function: Gearoid Reidy

(1) ChatGPT is based on a series of language models called GPT-3.5. GPT stands for Generative Pre-Trained Transformer.

(2) Aaronson also pointed out that the easiest way to track GPT text would be for OpenAI to store all its output in a central, giant database, which people could consult for matches. But that could lead to a privacy breach. It could reveal how other people have used the GPT model.

(3) Gartner estimates 60% of all data used to train AI will be synthetic by 2024, and it will completely overshadow real data for AI training by 2030.

This column does not necessarily reflect the opinion of the editorial board or Bloomberg LP and its owners.

Parmy Olson is a Bloomberg Opinion columnist covering technology. A former reporter for the Wall Street Journal and Forbes, she is author of “We Are Anonymous.”

More stories like this are available on bloomberg.com/opinion

©2022 Bloomberg L.P.

Spot Frequently Asked Questions (FAQ)

When was Spot founded?

Spot was founded in 2018.

Where is Spot's headquarters?

Spot's headquarters is located at 262 Convington Road, Los Altos.

What is Spot's latest funding round?

Spot's latest funding round is Series B.

How much did Spot raise?

Spot raised a total of $62M.

Who are the investors of Spot?

Investors of Spot include Bessemer Venture Partners, Redpoint Ventures, Scale Venture Partners, Stepstone Group, MVP Ventures and 5 more.

Who are Spot's competitors?

Competitors of Spot include Verkada and 5 more.

What products does Spot offer?

Spot's products include AI Camera System.

Who are Spot's customers?

Customers of Spot include Camel Express Car Wash, Splash Car Wash and Tombigbee Electric Power Association.

Compare Spot to Competitors

Verkada is a physical security platform used to protect people and assets in a privacy-respecting manner. The company's six product lines - video security cameras, access control, environmental sensors, alarms, visitor management, and mailroom management - provide visibility of building security through an integrated, secure cloud-based platform. The company was founded in 2016 and is based in San Mateo, California.

Eagle Eye Networks was created to make video security easier for all. The company offers web and cloud technologies to make cameras easier to use, more accessible, and more robust.

Gatekeeper provides patented, automated technology and security solutions designed to minimize merchandise and asset loss, track and retain shopping cart fleets, reduce retailers’ labor expenses and increase employee safety. On October 30, 2019, Gatekeeper was acquired by Graham Partners. Terms of the agreement were not disclosed.

Intenseye provides video analytics solutions. The company develops AI-powered video analytics used to protect occupational health and safety in the workplace. It was founded in 2018 and is based in New York, New York.

Red Piranha is an Australian cyber security start-up that manufactures and supplies end-to-end security solutions to safeguard information across the entire network and its borders, helping customers maintain confidentiality and integrity.

GoX Labs provides workforce injury risk predictive analytics and risk management tools on its dashboard. It uses IoT (Internet of Things) wearables that train workers for wellness and safety with instant haptic feedback. The company was founded in 2014 and is based in Phoenix, Arizona.
